Have You Been Robbed on the Last Mile of Sales?

It is a fair question, whether you are the seller or the customer. OK, so what is the last mile of sales? I didn't find an official definition, so I'm borrowing the concept from "the last mile of finance" between the balance sheet and the 10-K, and from the "last mile of telecommunications", the copper wire that runs from the common carrier's substation to your home or business. Let's call the last mile of sales

that part of the sales funnel in which prospects are ready to become customers, or are already customers, ready for up-selling and cross-selling.

Survey Participants were robbed on the Last Mile of Sales!

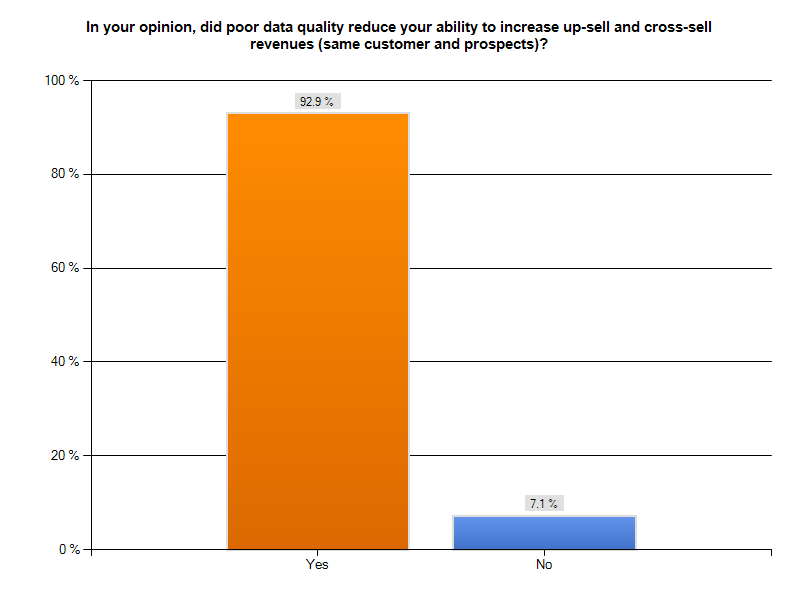

This Tuesday morning, I looked at our "Poor Data Quality - Negative Business Outcomes" survey results and noticed a surprising agreement among participants in one sales-related area. 126 respondents, or over 90% of those responding to our question about poor data quality compromising up-selling and cross-selling, indicated they had such a problem. The following graph gives you a sense of how large a percentage of respondents had lost sales opportunities. This is a troubling statistic. Organizations spend huge sums on marketing programs designed to attract prospects and nurture them to become customers. Beyond direct monetary investment, ensuring a successful trip down the sales funnel takes time, effort, and ability.

From the perspective of the seller, failing to sell more products and services to an existing (presumably happy) client is like being robbed on the last mile of sales. Your organization has already succeeded in making a first sale. Subsequent selling should be easier, not harder.

From the perspective of the buyer, losing confidence in your chosen vendor because they fail to know you and your preferences, confuse you with similarly named customers, or display inept record-keeping about their last contact with you, robs you of a relationship you had invested time and money in developing. Perhaps now your go-to vendor becomes your former vendor, and you must spend time seeking an alternate source. Once confidence has been shaken, it is difficult to rebuild.

What did the survey say?

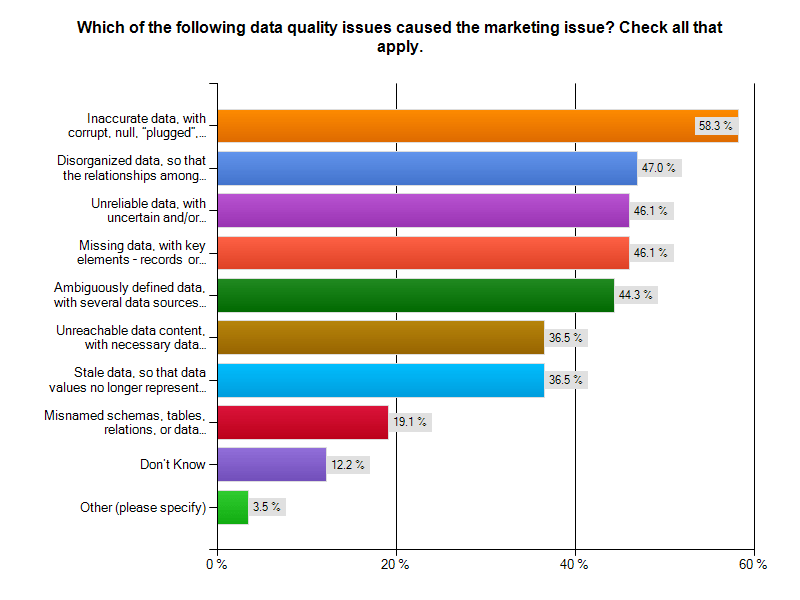

How is it possible that more than 90% of our respondents to this question lost an opportunity to up-sell or cross-sell? The next chart tells the story. It is poor data quality, plain and simple. You can read the results yourself. As a sales prospect for a lead generation service, I had a recent experience with at least one of the top four poor data quality problems.

Oops, the status wasn't updated after our last call

In the closing months of 2013, I was solicited by a lead generation firm. I asked them to contact me in the first quarter of 2014. Ten days into 2014, they called again. OK, perhaps a bit early in the quarter, but they were eager for my business. With no immediate need, I asked them to call me again in Q3-2014 to see how things were evolving. So, I was surprised when I received another call from that firm yesterday. Had we traveled through a time-warp? Was it now mid-summer? A look out the window at the snowstorm in progress suggested it was still February 2014. The caller was the same person as last time, and began an identical spiel. I interrupted and mentioned we had only spoken a week earlier. The caller appeared to remember and agree, indicating that there was no status update about the previous call. Was this sloppy ball-handling by sales, an IT issue, an ill-timed database restore? Was this a 1:1,000,000 chance or an everyday occurrence? The answer to all of those questions is "I have no idea, but I don't want to trust these folks with managing my lead generation campaign". If they can't handle their own sales process, how are they going to help me with mine? Whatever the cause of the gaffe, they robbed themselves of a prospect, and me of any confidence I might have had in them.

The Bottom Line

Being robbed on the last mile of sales by poor data quality is unnecessary, but all too common. Have you recently been robbed on the last mile of sales? Are you a seller, or a disappointed prospect or customer? Cal Braunstein of The Robert Frances Group and I would like to hear from you. Please do contact me to set up an appointment for a conversation. Whether you have already participated in our survey, are a member of the InfoGov community, or simply have an enlightening experience about how poor data quality caused you to have a negative business outcome, reach out and let us know.

Published by permission of Stuart Selip, Principal Consulting LLC

Predictions: People & Process Trends – 2014

RFG Perspective: The global economic headwinds in 2014, which constrain IT budgets, will force business and IT executives to more closely examine the people and process issues for productivity improvements. Externally IT executives will have to work with non-IT teams to improve and restructure processes to meet the new mobile/social environments that demand more collaborative and interactive real-time information. Simultaneously, IT executives will have to address the data quality and service level concerns that impact business outcomes, productivity and revenues so that there is more confidence in IT. Internally IT executives will need to increase their focus on automation, operations simplicity, and security so that IT can deliver more (again) at lower cost while better protecting the organization from cybercrimes.

As mentioned in the RFG blog "IT and the Global Economy – 2014" the global economic environment may not be as strong as expected, thereby keeping IT budgets contained or shrinking. Therefore, IT executives will need to invest in process improvements to help contain costs, enhance compliance, minimize risks, and improve resource utilization. Below are a few key areas that RFG believes will be the major people and process improvement initiatives that will get the most attention.

Automation/simplicity – Productivity in IT operations is a requirement for data center transformation. To achieve this IT executives will be pushing vendors to deliver more automation tools and easier to use products and services. Over the past decade some IT departments have been able to improve productivity by 10 times but many lag behind. In support of this, staff must switch from a vertical and highly technical model to a horizontal one in which they will manage services layers and relationships. New learning management techniques and systems will be needed to deliver content that can be grasped intuitively. Furthermore, the demand for increased IT services without commensurate budget increases will force IT executives to pursue productivity solutions to satisfy the business side of the house. Thus, automation software, virtualization techniques, and integrated solutions that simplify operations will be attractive initiatives for many IT executives.

Business Process Management (BPM) – BPM will gain more traction as companies continue to slice costs and demand more productivity from staff. Executives will look for BPM solutions that will automate redundant processes, enable them to get to the data they require, and/or allow them to respond to rapid-fire business changes within (and external to) their organizations. In healthcare in particular this will become a major thrust as the industry needs to move toward "pay for outcomes" and away from "pay for service" mentality.

Chargebacks – The movement to cloud computing is creating an environment that is conducive to implementation of chargebacks. The financial losers in this game will continue to resist but the momentum is turning against them. RFG expects more IT executives to be able to implement financially-meaningful chargebacks that enable business executives to better understand what the funds pay for and therefore better allocate IT resources, thereby optimizing expenditures. However, while chargebacks are gaining momentum across all industries, there is still a long way to go, especially for in-house clouds, systems and solutions.

Compliance – Thousands of new regulations took effect on January 1, as happens every year, making compliance even tougher. In 2014 the Affordable Care Act (aka Obamacare) kicked in for some companies but not others; compounding this, the U.S. President and his Health and Human Services (HHS) department keep issuing modifications to the law, which impact compliance and compliance reporting. IT executives will be hard pressed to keep up with compliance requirements globally and to improve users' support for compliance.

Data quality – A recent study by RFG and Principal Consulting on the negative business outcomes of poor data quality found that a majority of users consider data quality suspect. Most respondents believed inaccurate, unreliable, ambiguously defined, and disorganized data were the leading problems to be corrected. Some users will partially address this in 2014 by looking at data confidence levels in association with the type and use of the data. IT must fix this problem if it is to regain trust. But it is not just an IT problem, as it is costing companies dearly, in some cases more than 10 percent of revenues. Some IT executives will begin to capture the metrics required to build a business case to fix this, while others will implement data quality solutions aimed at fixing select problems that have been determined to be troublesome.

Operations efficiency – This will be an overriding theme for many IT operations units. As has been the case over the years the factors driving improvement will be automation, standardization, and consolidation along with virtualization. However, for this to become mainstream, IT executives will need to know and monitor the key data center metrics, which for many will remain a challenge despite all the tools on the market. Look for minor advances in usage but major double-digit gains for those addressing operations efficiency.

Procurement – With the requirement for agility and the move towards cloud computing, more attention will be paid to the procurement process and supplier relationship management in 2014. Business and IT executives that emphasize a focus on these areas can reduce acquisition costs by double digits and improve flexibility and outcomes.

Security – The use of big data analytics and more collaboration will help improve real-time analysis but security issues will still be evident in 2014. RFG expects the fallout from the Target and probable Obamacare breaches will fuel the fears of identity theft exposures and impair ecommerce growth. Furthermore, electronic health and medical records in the cloud will require considerable security protections to minimize medical ID theft and payment of HIPAA and other penalties by SaaS and other providers. Not all providers will succeed and major breaches will occur.

Staffing – IT executives will do limited hiring again this year and will rely more on cloud services, consulting, and outsourcing services. There will be some shifts on suppliers and resource country-pool usage as advanced cloud offerings, geopolitical changes and economic factors drive IT executives to select alternative solutions.

Standardization – More and more IT executives recognize the need for standardization, but advancement will require a continued executive push and involvement. Because this will become political, most new initiatives will be the result of the desire for cloud computing rather than internal leadership.

SLAs – Most IT executives and cloud providers have yet to provide the service levels businesses are demanding. More and better SLAs, especially for cloud platforms, are required. IT executives should push providers (and themselves) for SLAs covering availability, accountability, compliance, performance, resiliency, and security. Companies that address these issues will be the winners in 2014.

Watson – The IBM Watson cognitive system is still at the beginning of the acceptance curve, but IBM is opening up Watson for developers to create their own applications. 2014 might be a breakout year, starting a new wave of cognitive systems that will transform how people and organizations think, act, and operate.

RFG POV: 2014 will likely be a less daunting year for IT executives but people and process issues will have to be addressed if IT executives hope to achieve their goals for the year. This will require IT to integrate itself with the business and work collaboratively to enhance operations and innovate new, simpler approaches to doing business. Additionally, IT executives will need to invest in process improvements to help contain costs, enhance compliance, minimize risks, and improve resource utilization. IT executives should collaborate with business and financial executives so that IT budgets and plans are integrated with the business and remain so throughout the year.

The Butterfly Effect of Bad Data (Part 2)

Last time... Bad Data was revealed to be pervasive and costly

In the first part of this two-part post, I wrote about the truly abysmal business outcomes our survey respondents reported in our "Poor Data Quality - Negative Business Outcomes" survey. Read about it here. In writing part 1, I was stunned by the following statistic: 95% of those suffering supply chain issues noted reduced or lost savings that might have been attained from better supply chain integration. The lost savings were large, with 15% reporting missed savings of up to 20%! In this post, I'll have a look at supply chains, and how passing bad data among participants harms participants and stakeholders, and how this can cause a "butterfly effect".

Supply Chains spread the social disease of bad data

A supply chain is a community of "consenting" organizations that pass data across and among themselves to facilitate business functions such as sales, purchase ordering, inventory management, order fulfillment, and the like. The intention is that executing these business functions properly will benefit the end consumer, and all the supply chain participants.

In case the Human Social Disease Analogy is not clear...

Human social diseases spread like wildfire among communities of consenting participants. In my college days, the news that someone contracted a social disease was met with chuckles, winks, and a trip to the infirmary for a shot of penicillin. Once, a business analogy to those long-past days might have been learning that the 9-track tape you sent to a business partner had the wrong blocking factor, was encoded in ASCII instead of EBCDIC, or contained yesterday's transactions instead of today's. All of those problems were easily fixed. Just like getting a shot of penicillin.

Today, we live in a different world. As we learned with AIDS, a social disease can have pervasive and devastating results for individuals and society. With a communicable disease, the inoculant has its "bad data" encoded in DNA. Where supply chains are concerned, the social disease inoculant is likely to be an XML-encoded transaction sent from one supply chain participant to another. In this case, the "bad transaction" information about the customer, product, quantity, price, terms, due date, or other critical information will simply be wrong, causing a ripple of negative consequences across the supply chain. That ripple is the Butterfly Effect.

The BUTTERFLY EFFECT

The basis of the Butterfly Effect is that a small, apparently random input to an interconnected system (like a supply chain) may have a ripple effect, the ultimate impact of which cannot be reasonably anticipated. As the phrase was constructed by Philip Merilees back in 1972...

Does the flap of a butterfly’s wings in Brazil set off a tornado in Texas?

According to Martin Christopher, in his 2005 E-Book "Logistics and Supply Chain Management", the butterfly can and will upset the supply chain.

Today's supply chains are underpinned by the exchange of information between all the entities and levels that comprise the complete end-to-end [supply chain] network... The so-called "Bullwhip" effect is the manifestation of the way that demand signals can be considerably distorted as a result of multiple steps in the chain. As a result of this distortion, the data that is used as input to planning and forecasting activities can be flawed and hence forecast accuracy is reduced and more costs are incurred. (Emphasis provided)

Supply Chains Have Learned that Bad Data is a Social Disease

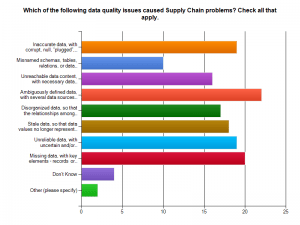

Supply chains connect a network of organizations that collaborate to create and distribute a product to its consumers. Specifically, Investopedia defines a supply chain as:

The network created amongst different companies producing, handling and/or distributing a specific product. Specifically, the supply chain encompasses the steps it takes to get a good or service from the supplier to the customer.

Managing the supply chain involves exchanges of data among participants. It is easy to see that exchanging bad data would disrupt the chain, adding cost, delay, and risk of ultimate delivery failure to the supply chain mix. With sufficient bad data, the value delivered by a managed supply chain would come at a higher cost and risk. Consider the graphic of supply chain management and the problems our survey respondents found in their experiences with supply chains and bad data.

Bad data means ambiguously defined data, missing data, and inaccurate data with corrupt or plugged values. These issues lead the list of supply chain data problems found by our survey respondents.

Would you be pleased to purchase a new car delivered with parts that do not work or break because suppliers misinterpreted part specifications? Do you remember the old 1960-era Fords whose doors let snow inside because of their poor fit? Let's not pillory Ford, as GM and Chrysler had their own quality meltdowns too. Supply chain-derived quality issues like these kill revenues and harm consumers and brands.

Would you like to drive the automobile that contained safety components ordered with missing and corrupt data? What about that artificial knee replacement you were considering? Suppose the specifications for your medical device implant had been ambiguously defined and then misinterpreted. Ready to go ahead with that surgery? Bad data is a social disease, and it could make you suffer!

Bad Data is an Expensive Supply Chain Social Disease

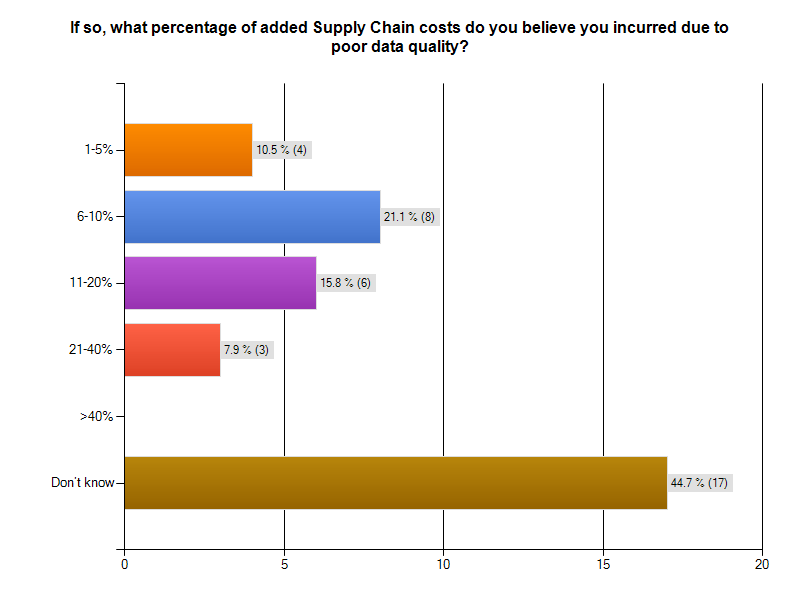

Bad data is costing supply chain participants big money. As the graphic from our survey indicates, more than 20% of respondents to the Supply Chain survey segment thought that data quality problems added between 6% and 10% to the cost of operating their supply chain. Almost 16% said data quality problems added between 11% and 20% to supply chain operating costs. That is HUGE! Notice that 44% of the respondents could not monetize their supply chain data problems. That is a serious finding, in and of itself.

THOUGHT EXPERIMENT: CUT SUPPLY CHAIN MANAGEMENT COSTS by 20%

Over 15% of survey respondents with supply chain issues believed bad data added between 11% and 20% to the cost of operating their supply chain. Let's use 20% in our thought experiment, to yield a nice round number.

Understanding the total cost of managing a supply chain is a non-trivial exercise. Industry body The Supply Chain Council has defined The Supply Chain Operations Reference (SCOR®) model. According to that reference model, Supply Chain Management Costs include the costs to plan, source, and deliver products and services. The costs of making products and handling returns are captured in other aggregations.

For a manufacturing firm with a billion dollar revenue stream, the total costs of managing a supply chain will be around 20% of revenue, or $200,000,000 USD. Reducing this cost by 20% would mean an annual saving of $40,000,000 USD. That would be a significant savings for a data cleanup and quality sustenance investment of perhaps $3,000,000. The clean-up investment would be a one-time expense. Even if the $40,000,000 were a one-time savings, it would be about 13 times the investment, a return of roughly 1,333%.

But wait, it is better than that. The $40,000,000 savings recurs annually. The payback period is measured in months. The ROI is enormous. Having clean data pays big dividends!

If you think the one-time "get it right and keep it right" investment would be more like $10,000,000 USD, your payback period would still be measured in months, not years. Let's add a 20% additional cost, or $2,000,000 USD per year, in years 2-5 for maintenance and additional quality work. That means you would have spent $18,000,000 USD in 5 years to achieve a savings of $200,000,000. That would be greater than a 10-times return on your money! Not too shabby an investment, and your partners and stakeholders would be saving money too. This scenario is really a win-win situation, right down the line to your customers.
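The arithmetic in this thought experiment is easy to check. The following is a minimal Python sketch, not part of the survey analysis; the function names are hypothetical, and the inputs are simply the assumptions used above (a $1 billion revenue stream, supply chain management costs at 20% of revenue, a 20% cost reduction, and the $3,000,000 and $10,000,000 investment scenarios).

```python
def annual_savings(revenue, scm_cost_pct, reduction_pct):
    """Yearly savings from cutting supply chain management (SCM) costs.

    Percentages are whole numbers (20 means 20%) so the dollar math stays in integers.
    """
    scm_cost = revenue * scm_cost_pct // 100
    return scm_cost * reduction_pct // 100


def payback_months(investment, yearly_savings):
    """Months of savings needed to recover a one-time investment."""
    return investment / (yearly_savings / 12)


def five_year_outcome(upfront, yearly_upkeep, yearly_savings, years=5):
    """Total spend, total savings, and savings per dollar spent over the period.

    Upkeep is assumed to be paid in every year after the first (years 2-5).
    """
    total_spend = upfront + yearly_upkeep * (years - 1)
    total_savings = yearly_savings * years
    return total_spend, total_savings, total_savings / total_spend


savings = annual_savings(1_000_000_000, 20, 20)
print(f"Annual savings: ${savings:,}")
print(f"Payback on $3M: {payback_months(3_000_000, savings):.1f} months")
print(f"Payback on $10M: {payback_months(10_000_000, savings):.1f} months")

spend, saved, multiple = five_year_outcome(10_000_000, 2_000_000, savings)
print(f"Five years: spend ${spend:,}, save ${saved:,}, about {multiple:.1f}x")
```

Changing the three inputs to `annual_savings` lets you rerun the same reasoning with your own revenue and cost assumptions.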

The Corcardia Group believes that total supply chain costs for hospitals approach 50% of the hospital's operating budget. For a hospital with a $60,000,000 USD annual operating budget, that is about $30,000,000 USD in supply chain costs, so a 20% savings means roughly $6,000,000 USD would be freed for other uses, like curing patients and preventing illness.

Even Better...

For manufacturers, hospitals, and other supply chain participants, ridding themselves of bad data will produce still better returns. By cleaning up the data throughout the supply chain, it is likely that costs would go down while margins would improve. The product costs for participants could drop. Firms might realize an additional 5% cost savings from this as well. Their return is even better.

What does the Supply Chain Community say about Data Quality?

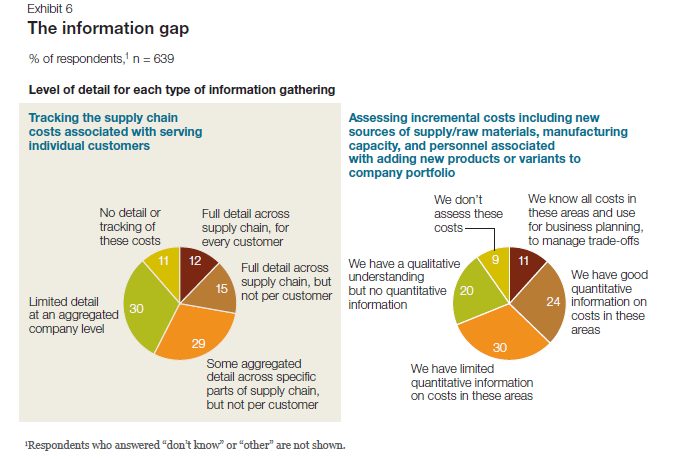

In a 2011 McKinsey & Company study entitled McKinsey on Supply Chain: Selected Publications, which you can download here, the publication "The Challenges Ahead for Supply Chains" by Trish Gyorey, Matt Jochim, and Sabina Norton goes right to the heart of a supply chain's dependency on data, and the weakness of current supply chain decision-making based on that data. According to the authors:

Knowledge is power

The results show a similar disconnection between data and decision making: companies seem to collect and use much less detailed information than our experience suggests is prudent in making astute supply chain decisions (Exhibit 6). For example, customer service is becoming a higher priority, and executives say their companies balance service and cost to serve effectively... Half of the executives say their companies have limited or no quantitative information about incremental costs for raw materials, manufacturing capacity, and personnel, and 41 percent do not track per-customer supply chain costs at any useful level of detail.

Here is Exhibit 6 - a graphic from their study, referenced in the previous quote.

Andrew White, writing about master data management for the Supply Chain Quarterly in its Q2-2013 issue, underscored the importance of data quality and consistency for supply chain participants.

... there is a growing emphasis among many organizations on knowing their customers' needs. More than this, organizations are seeking to influence the behavior of customers and prospects, guiding customers' purchasing decisions toward their own products and services and away from those of competitors. This change in focus is leading to a greater demand for and reliance on consistent data.

White's take-away from this is...

...as companies' growing focus on collaboration with trading partners and their need to improve business outcomes, data consistency, especially between trading partners, is increasingly a prerequisite for improved and competitive supply chain performance. As data quality and consistency become increasingly important factors in supply chain performance, companies that want to catch up with the innovators will have to pay closer attention to master data management. That may require supply chain managers to change the way they think about and utilize data.

Did everyone get that? Data Quality and Consistency are important factors in supply chain performance. You want your auto and your artificial knee joint to work properly and consistently, as their designers and builders intended. This means curing existing data social disease victims and preventing the recurrence and spread.

The Bottom Line

At this point, nearly 300 respondents have begun their surveys, and more than 200 have completed them. I urge those who have left their surveys in mid-course to complete them!

Bad data is a social disease that harms supply chain participants and stakeholders. Do take a stand on wiping it out. The simplest first step is to make your experiences known to us by visiting the IBM InfoGovernance site and taking our "Poor Data Quality - Negative Business Outcomes" survey.

When you get to the question about participating in an interview, answer "YES" and give us real examples of problems, solutions attempted, success attained, and failures sustained. Only by publicizing the magnitude and pervasiveness of this social disease will we collectively stand a chance of achieving cure and prevention.

As a follow-up step, work with us to survey your organization in a private study that parallels our public InfoGovernance study. The public study forms an excellent baseline for us to compare and contrast the specific data quality issues within your organization. Data Quality will not be attained and sustained until your management understands the depth and breadth of the problem and its cost to your organization's bottom line.

Contact me here and let us help you build the business case for eliminating the causes of bad data. Published by permission of Stuart Selip, Principal Consulting LLC

Decision-Making Bias – What, Me Worry?

RFG POV: The results of decision-making bias can come back to bite you. Decision-making bias exists and is challenging to eliminate! In my last post, I discussed the thesis put forward by Nobel Laureate Daniel Kahneman and co-authors Dan Lovallo, and Olivier Sibony in their June 2011 Harvard Business Review (HBR) article entitled "Before You Make That Big Decision…". With the right HBR subscription you can read the original article here. Executives must find and neutralize decision-making bias.

The authors discuss the impossibility of identifying and eliminating decision-making bias in ourselves, but leave open the door to finding decision-making bias in our processes and in our organization. Even better, beyond detecting bias you may be able to compensate for it and make sounder decisions as a result. Kahneman and his McKinsey and Co. co-authors state:

We may not be able to control our own intuition, but we can apply rational thought to detect others' faulty intuition and improve their judgment.

Take a systematic approach to decision-making bias detection and correction

The authors suggest a systematic approach to detecting decision-making bias. They distill their thinking into a dozen rules to apply when taking important decisions based on recommendations of others. The authors are not alone in their thinking!

In an earlier post on critical thinking, I mentioned the Baloney Detection Kit. You can find the "Baloney Detection Kit" for grown-ups from the Richard Dawkins Foundation for Reason and Science and Skeptic Magazine editor Dr. Michael Shermer on the Brainpickings.org website, along with a great video on the subject.

Decision-making Bias Detection and Baloney Detection

How similar are Decision-bias Detection and Baloney Detection? You can judge for yourself by looking at the table following. I’ve put each list in the order that it was originally presented, and made no attempt to cross-reference the entries. Yet it is easy to see the common threads of skepticism and inquiry. It is all about asking good questions, and anticipating familiar patterns of biased thought. Of course, basing the analysis on good quality data is critical!

Decision-Bias Detection and Baloney Detection Side by Side

| Decision-Bias Detection | Baloney Detection |
|---|---|
| Is there any reason to suspect errors driven by your team's self-interest? | How reliable is the source of the claim? |
| Have the people making the decision fallen in love with it? | Does the source of the claim make similar claims? |
| Were there any dissenting opinions on the team? | Have the claims been verified by someone else (other than the claimant)? |
| Could the diagnosis of the situation be overly influenced by salient analogies? | Does this claim fit with the way the world works? |
| Have credible alternatives been considered? | Has anyone tried to disprove the claim? |
| If you had to make this decision again in a year, what information would you want, and can you get more of it now? | Where does the preponderance of the evidence point? |
| Do you know where the numbers came from? | Is the claimant playing by the rules of science? |
| Can you see the "Halo" effect? (The story seems simpler and more emotional than it really is.) | Is the claimant providing positive evidence? |
| Are the people making the recommendation overly attached to past decisions? | Does the new theory account for as many phenomena as the old theory? |
| Is the base case overly optimistic? | Are personal beliefs driving the claim? |
| Is the worst case bad enough? | |
| Is the recommending team overly cautious? | |

Conclusion

While I have blogged about the negative business outcomes due to poor data quality, good quality data alone will not save you from the decision-making bias of your recommendations team. When good-quality data is misapplied or misinterpreted, absent from the decision-making process, or ignored due to personal "gut" feelings, decision-making bias is right there, ready to bite you. Stay alert, and stay skeptical!

Reprinted by permission of Stu Selip, Principal Consulting LLC